RNA language models developed at UC Berkeley can predict mutations that improve RNA function

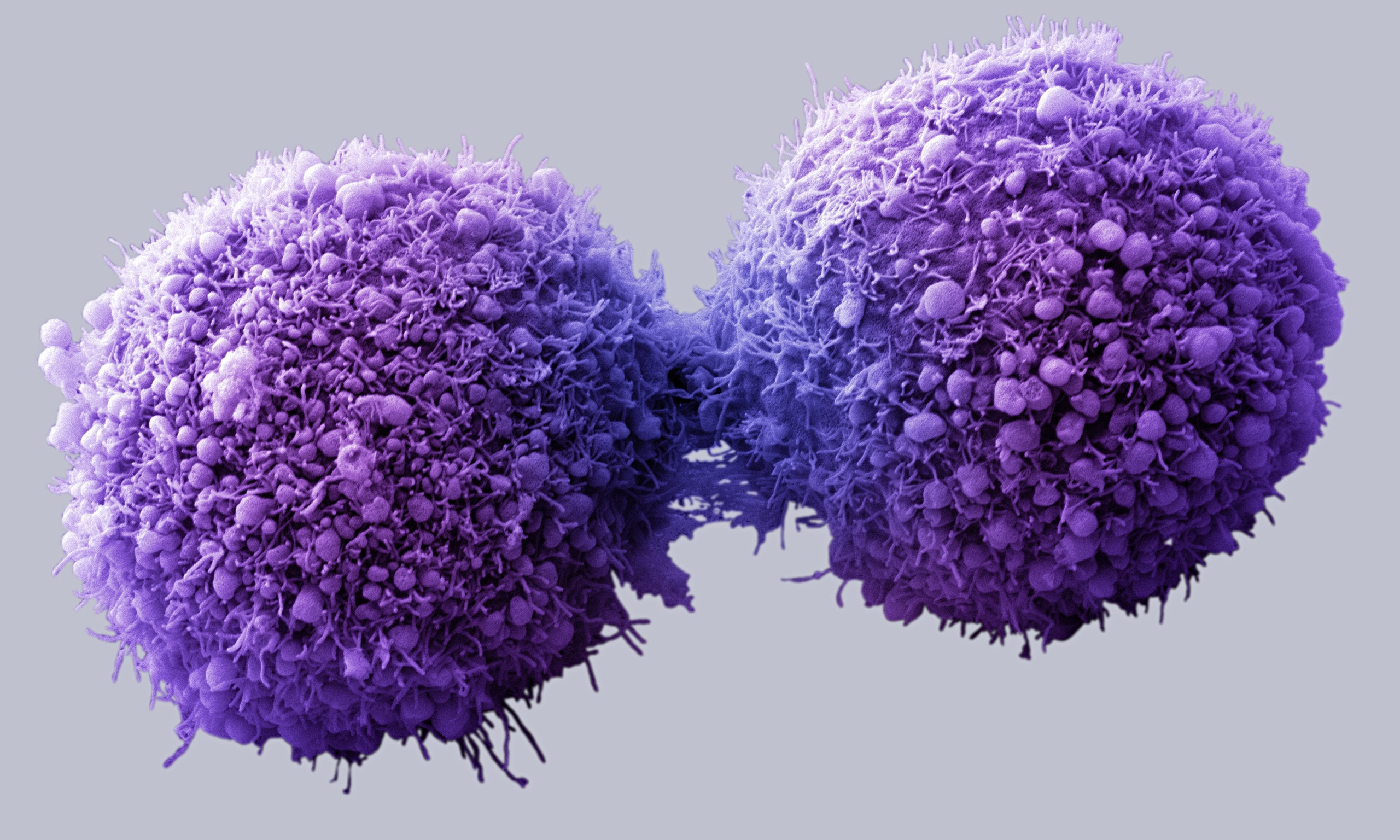

Ribosomes are tiny factories that cells use to make proteins. For years, scientists have looked for ways to engineer these cellular factories to help us make medicines, polymers, or even clean up the environment with bioremediation. In a new preprint, researchers from the Innovative Genomics Institute (IGI), the NSF Center for Genetically Encoded Materials (C-GEM), and UC Berkeley’s Department of Electrical Engineering and Computer Sciences (EECS) and Center for Computational Biology, led by IGI and C-GEM Investigator Jamie Cate, share deep learning models that bring us closer to using ribosomes as multi-purpose factories.

Ribosomes are made of a combination of RNA, DNA’s single-stranded cousin, and protein. Like DNA, RNA is made up of nucleotide bases represented by four letters. While researchers have made strides in using deep learning to predict protein structures with breakthrough tools like AlphaFold and ESMFold, RNA has received less attention.

With existing sequencing methods, researchers could compare RNA from different organisms and find mutations that might result in different functions. But researchers looking to expand the capabilities of the ribosome could only learn so much from that approach, particularly because the natural variation found in ribosomes is relatively small.

“We reached a limit of what we could do just using those kinds of sequence comparison approaches, so we started thinking about, well, could we apply deep learning approaches to this?” says Cate.

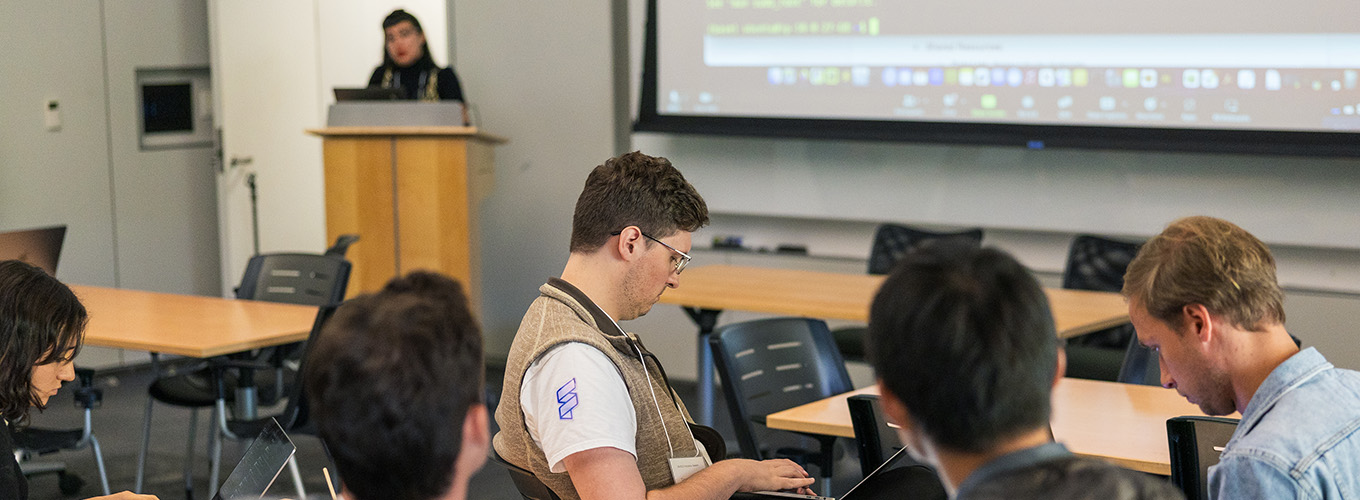

To tackle the problem, Cate busied himself learning the latest developments in AI and neural networks and began experimenting with AI expert Andrej Karpathy’s nanoGPT code on GitHub. Seeing an opportunity to combine the expertise of genomic researchers at the IGI and computer scientists in the EECS department, in the fall of 2023 Cate convened a hackathon with the two groups to start developing tools to apply machine learning to the RNA universe.

Their first accomplishment was putting together a high-quality RNA data set on which to train the deep learning models. Compared to DNA and proteins, data on RNA is relatively scarce, and good models depend on large amounts of high-quality data.

“If you look at similar papers that are trying to solve RNA folding, we all come to the same conclusion that only about a thousand RNAs have high-quality empirical structures. There really just is very little data out there in databases and literature of solved RNA structures, and even less so RNA structures that are matched with phenotypes,” says Marena Trinidad, a bioinformatician in the Doudna lab at the IGI and a first author on the paper.

After comparing multiple approaches, the most successful deep learning model that emerged was a language model, similar to GPT or Llama. In these systems, text, whether human language or RNA sequence, is split into tokens, and each token is represented as a high-dimensional vector.

“There are other options out there for machine learning, but we chose generative language models,” says Trinidad. “Of course, it would be great to test all possible combinations of mutations, but we physically can’t. The language model gives us results that we can feasibly start running with in the lab.”

The group’s big breakthrough was realizing that instead of looking at individual nucleotide letters, they needed to look at overlapping groups of three nucleotides to get predictive information.

“My interpretation of why it works is that it’s reflecting what’s really going on with RNA structure, which is dependent on how these bases stack on each other,” says Cate. “An RNA sequence is like a stack of plates, so you don’t really want to think about how a single plate is positioned without considering the plates above and below it. And it’s different from proteins because in RNA, the bases, the parts that are in those stacks of plates, they’re the ones that drive the structure.”

Each nucleotide letter has four possible neighbors on each side, so it can sit in 16 (4 × 4) different immediate contexts. By including this information about how the nucleotides are stacked, the model has richer information from which to make predictions. These predictions have been borne out in the group’s initial laboratory experiments: they trained their deep learning models, called Garnet DL, on RNA sequences from thermophiles, microbes that thrive in high-temperature environments, and were able to predict mutations that would increase the stability of the ribosome at higher temperatures.
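To make the idea of overlapping three-letter groups concrete, here is a minimal Python sketch. It is an illustration only, not the Garnet DL tokenizer: the function names, the example sequence, and the one-base sliding step are assumptions chosen for clarity. It splits a short RNA sequence into overlapping triplets and enumerates the 16 neighbor combinations that can surround a single base.

```python
from itertools import product

BASES = "ACGU"  # the four RNA nucleotide letters


def overlapping_triplets(seq):
    """Split a sequence into 3-letter windows that slide one base at a time.

    Hypothetical helper for illustration; the preprint's actual
    tokenization may differ in its details.
    """
    return [seq[i:i + 3] for i in range(len(seq) - 2)]


def flanking_contexts(center):
    """List every triplet that has `center` as its middle base."""
    return [left + center + right for left, right in product(BASES, repeat=2)]


# A made-up 7-letter sequence yields 5 overlapping triplets.
tokens = overlapping_triplets("GGCUAAC")

# One base in the middle, four choices on each side: 4 x 4 contexts.
contexts = flanking_contexts("A")

# Every possible triplet over four letters: 4 x 4 x 4 tokens.
vocabulary = ["".join(t) for t in product(BASES, repeat=3)]

print(tokens)
print(len(contexts), len(vocabulary))
```

Because neighboring triplets share two letters, each base appears in up to three tokens, so every token carries information about the bases stacked on either side of its center. The cost is a larger vocabulary: 64 possible triplets instead of four single letters.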

Both Cate and Trinidad stress how important it was to bring together researchers from both the IGI and EECS and build on their complementary strengths in genomics and machine learning.

“It was very synergistic. I honestly don’t think we could have done the paper without experts on both sides, really being able to figure out what the best approach was for the paper and especially to get across the hurdle of data scarcity,” says Trinidad.

Right now, the group can use Garnet DL to predict how mutations in RNA sequence will affect ribosome structure and function, and they are working on more experimental validations of their findings in the lab. In the future, they hope to expand their work to predict RNA structure and function beyond the ribosome, and to enable researchers to engineer RNA with entirely new, customized functions.

Preprint on bioRxiv: RNA language models predict mutations that improve RNA function by Yekaterina Shulgina, Marena Trinidad, Conner Langeberg, Hunter Nisonoff, Seyone Chithrananda, Petr Skopintsev, Amos Nissley, Jaymin Patel, Ron Boger, Honglue Shi, Peter Yoon, Erin Doherty, Tara Pande, Aditya Iyer, Jennifer Doudna, and Jamie Cate.

This work was supported in part by the NSF Center for Genetically Encoded Materials (C-GEM) and the NSF Graduate Research Fellowship Program.

Media contact: Andy Murdock andy.murdock@berkeley.edu

Top photo: Marena Trinidad speaking to the team at the IGI-EECS hackathon. All photos by Andy Murdock, Innovative Genomics Institute.